Small Rust crates I (almost) always use

Posted on Tue 31 October 2017 in Code • Tagged with Rust, libraries

Alternative clickbait title: My Little Crates: Rust is Magic :-)

Due to its relatively scant standard library, programming in Rust inevitably involves pulling in a good number of third-party dependencies.

Some of them deal with problems that are solved with built-ins in languages that take a more “batteries included” approach. A good example would be Python’s re module, whose moral equivalent in the Rust ecosystem is the regex crate.

Things like regular expressions, however, represent comparatively large problems. It isn’t very surprising that dedicated libraries exist to address them. It is less common for a language to offer small packages that target very specialized applications.

As in, specialized down to a single function, type, or macro, or perhaps only a little larger than that.

In this post, we’ll take a whirlwind tour through a bunch of such essential “micropackages”.

either

Rust has the built-in Result type, which is a sum¹ of an Ok outcome or an Err outcome. It forms the basis of a general error handling mechanism in the language.

Structurally, however, Result<T, E> is just an alternative between the types T and E. You may want to use such an enum for purposes other than representing the results of fallible operations. Unfortunately, because of the strong inherent meaning of Result, such usage would be unidiomatic and highly confusing.

This is why the either crate exists. It contains the following Either type:

```rust
enum Either<L, R> {
    Left(L),
    Right(R),
}
```

While it is isomorphic to Result, it carries no connotation of the entrenched error handling practices². Additionally, it offers symmetric combinator methods such as map_left or right_and_then for chaining computations involving Either values.
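To make those combinators concrete, here is a small self-contained sketch. So that it compiles without the dependency, it defines its own two-variant Either with map_left and right_and_then modeled on the crate's methods of the same names; it is not the crate's source, and parse_timeout is a hypothetical example where neither alternative represents an error.

```rust
/// Minimal stand-in for the either crate's type (the real crate has many more methods).
#[derive(Debug, PartialEq)]
pub enum Either<L, R> {
    Left(L),
    Right(R),
}

impl<L, R> Either<L, R> {
    /// Transform a Left value; a Right value passes through untouched.
    pub fn map_left<M, F: FnOnce(L) -> M>(self, f: F) -> Either<M, R> {
        match self {
            Either::Left(l) => Either::Left(f(l)),
            Either::Right(r) => Either::Right(r),
        }
    }

    /// Chain a computation that itself yields an Either, on the Right side only.
    pub fn right_and_then<S, F: FnOnce(R) -> Either<L, S>>(self, f: F) -> Either<L, S> {
        match self {
            Either::Left(l) => Either::Left(l),
            Either::Right(r) => f(r),
        }
    }
}

/// Hypothetical: a timeout given either as a number of seconds or as a symbolic name.
pub fn parse_timeout(s: &str) -> Either<u64, String> {
    match s.parse::<u64>() {
        Ok(secs) => Either::Left(secs),
        Err(_) => Either::Right(s.to_string()),
    }
}
```

Because neither side is privileged, the left-hand and right-hand combinators mirror each other, which is exactly what Result's Ok-biased API does not offer.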

lazy_static

As a design choice, Rust doesn’t allow for safe access to global mutable variables. The semi-standard way of introducing those into your code is therefore the lazy_static crate.

However, its most important use is declaring lazily initialized constants of more complex types:

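```rust
use lazy_static::lazy_static;
use std::time::Duration;

lazy_static! {
    // Initialized on first access, then shared for the rest of the program.
    static ref TICK_INTERVAL: Duration = Duration::from_secs(7 * 24 * 60 * 60);
}
```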

The trick isn’t entirely transparent³, but it’s the best you can do until we get proper support for compile-time expressions in the language.
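For comparison, the same "initialize once, on first use" pattern can be sketched with the standard library alone via std::sync::OnceLock. Note that this is a swapped-in technique: OnceLock was stabilized much later (Rust 1.70) and is not what lazy_static uses internally. The names SECONDS_PER_UNIT and seconds_per_unit are hypothetical.

```rust
use std::collections::HashMap;
use std::sync::OnceLock;

// OnceLock stands in for lazy_static! here; the table contents are illustrative.
static SECONDS_PER_UNIT: OnceLock<HashMap<&'static str, u64>> = OnceLock::new();

/// Builds the table on the first call and returns the cached one afterwards.
pub fn seconds_per_unit() -> &'static HashMap<&'static str, u64> {
    SECONDS_PER_UNIT.get_or_init(|| {
        let mut table = HashMap::new();
        table.insert("minute", 60);
        table.insert("hour", 60 * 60);
        table.insert("week", 7 * 24 * 60 * 60);
        table
    })
}
```

The explicit accessor function is the price of doing without a macro; lazy_static hides the equivalent behind a Deref impl on the static itself.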

maplit

To go nicely with the crate above, and to act as a natural syntactic follow-up to the standard vec![] macro, we’ve got the maplit crate. What it does is add HashMap and HashSet “literals” by defining some very simple hashmap! and hashset! macros:

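```rust
use std::collections::HashMap;
use lazy_static::lazy_static;
use maplit::hashmap;

// ImageFormat is assumed to be an enum already in scope (not shown).
lazy_static! {
    static ref IMAGE_EXTENSIONS: HashMap<&'static str, ImageFormat> = hashmap!{
        "gif" => ImageFormat::GIF,
        "jpeg" => ImageFormat::JPEG,
        "jpg" => ImageFormat::JPG,
        "png" => ImageFormat::PNG,
    };
}
```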

Internally, hashmap! expands to the appropriate number of HashMap::insert calls, returning the finished hash map with all the keys and values given.
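The expansion described above can be sketched with macro_rules!. This is a simplified, hypothetical re-creation rather than maplit's actual source (it omits details such as pre-sizing the map), but it emits one HashMap::insert call per key-value pair in the same spirit. mime_types is an illustrative name.

```rust
// Simplified hashmap!-style macro: one insert per `key => value` pair,
// with an optional trailing comma.
macro_rules! hashmap {
    ($($key:expr => $value:expr),* $(,)?) => {{
        let mut map = ::std::collections::HashMap::new();
        $( map.insert($key, $value); )*
        map
    }};
}

/// Hypothetical lookup table built with the macro.
pub fn mime_types() -> std::collections::HashMap<&'static str, &'static str> {
    hashmap! {
        "gif" => "image/gif",
        "jpeg" => "image/jpeg",
        "png" => "image/png",
    }
}
```

Using the fully qualified ::std::collections::HashMap path inside the macro means callers do not need their own `use` for it.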

try_opt

Before the ? operator was introduced to Rust, the idiomatic way of propagating erroneous Results was the try! macro. A similar macro can also be implemented for Option types so that it propagates Nones upstream. The try_opt crate does precisely that, and the macro can be used in a straightforward manner:

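```rust
use lazy_static::lazy_static;
use regex::Regex;
use try_opt::try_opt;

fn parse_ipv4(s: &str) -> Option<(u8, u8, u8, u8)> {
    // The regex is compiled only once, on first use.
    lazy_static! {
        static ref RE: Regex = Regex::new(
            r"^(\d{1,3})\.(\d{1,3})\.(\d{1,3})\.(\d{1,3})$"
        ).unwrap();
    }
    // Each try_opt! returns None from parse_ipv4 if its argument is None.
    let caps = try_opt!(RE.captures(s));
    let a = try_opt!(caps.get(1)).as_str();
    let b = try_opt!(caps.get(2)).as_str();
    let c = try_opt!(caps.get(3)).as_str();
    let d = try_opt!(caps.get(4)).as_str();
    // Octets above 255 fail to parse as u8 and also yield None.
    Some((
        try_opt!(a.parse().ok()),
        try_opt!(b.parse().ok()),
        try_opt!(c.parse().ok()),
        try_opt!(d.parse().ok()),
    ))
}
```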

Until Rust supports ? for Options (which is planned), this try_opt! macro can serve as an acceptable workaround.
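A try_opt!-style macro takes only a few lines of macro_rules!. The sketch below is a hypothetical re-creation, not the crate's source, used in a regex-free variant of parse_ipv4 that splits on dots; it therefore only roughly matches the regex version (for instance, it does not limit octets to three digits). For comparison, the same function is also written with ? on Option, which was stabilized shortly after this post, in Rust 1.22.

```rust
// Evaluate to the value inside Some, or return None from the enclosing function.
macro_rules! try_opt {
    ($e:expr) => {
        match $e {
            Some(value) => value,
            None => return None,
        }
    };
}

/// Regex-free variant of parse_ipv4 built on the macro above.
pub fn parse_ipv4_macro(s: &str) -> Option<(u8, u8, u8, u8)> {
    let parts: Vec<&str> = s.split('.').collect();
    if parts.len() != 4 {
        return None;
    }
    Some((
        try_opt!(parts[0].parse::<u8>().ok()),
        try_opt!(parts[1].parse::<u8>().ok()),
        try_opt!(parts[2].parse::<u8>().ok()),
        try_opt!(parts[3].parse::<u8>().ok()),
    ))
}

/// The same function written with ? on Option.
pub fn parse_ipv4(s: &str) -> Option<(u8, u8, u8, u8)> {
    let mut parts = s.split('.');
    let octets = (
        parts.next()?.parse::<u8>().ok()?,
        parts.next()?.parse::<u8>().ok()?,
        parts.next()?.parse::<u8>().ok()?,
        parts.next()?.parse::<u8>().ok()?,
    );
    // Reject trailing components such as "1.2.3.4.5".
    if parts.next().is_some() {
        return None;
    }
    Some(octets)
}
```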

exitcode

In basically every mainstream OS, it is a common convention that a process which exits with a code other than 0 (zero) has finished with an error. Linux divides the space of error codes further and, along with BSD, it also includes the sysexits.h header with some more specialized codes.

These have been adopted by a great many programs and languages. In Rust, those semi-standard names for common errors can be used, too. All you need to do is add the exitcode crate to your project:

```rust
fn main() {
    let options = args::parse().unwrap_or_else(|e| {
        print_args_error(e).unwrap();
        std::process::exit(exitcode::USAGE);
    });
```

In addition to constants like USAGE or TEMPFAIL, the exitcode crate also defines an ExitCode alias for the integer type holding the exit codes. You can use it, among other things, as a return type of your top-level functions:

```rust
    let code = do_stuff(options);
    std::process::exit(code);
}

fn do_stuff(options: Options) -> exitcode::ExitCode {
    // ...
    exitcode::OK
}
```
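To show the numbers behind these names, here is a dependency-free sketch that defines a few constants by hand, using the values sysexits.h assigns (EX_USAGE is 64, EX_DATAERR 65, EX_SOFTWARE 70, EX_TEMPFAIL 75). The exitcode crate already provides these and more; the hand-written constants and the slice-based run function are hypothetical stand-ins.

```rust
// Hand-written stand-ins for a few exitcode constants; values follow sysexits.h.
pub type ExitCode = i32;

pub const OK: ExitCode = 0; // successful termination
pub const USAGE: ExitCode = 64; // EX_USAGE: command line usage error
pub const DATAERR: ExitCode = 65; // EX_DATAERR: incorrect input data
pub const SOFTWARE: ExitCode = 70; // EX_SOFTWARE: internal software error
pub const TEMPFAIL: ExitCode = 75; // EX_TEMPFAIL: temporary failure, retry later

/// Hypothetical top-level function: each outcome gets a conventional code
/// rather than a blanket 1.
pub fn run(args: &[&str]) -> ExitCode {
    match args {
        // No arguments at all: the program was invoked incorrectly.
        [] => USAGE,
        // A single empty argument: treat it as malformed input data.
        [input] if input.is_empty() => DATAERR,
        _ => OK,
    }
}
```

In a real program the returned code would be handed to std::process::exit, just like in main above.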

enum-set

In Java, there is a specialization of the general Set interface that works for enum types: the EnumSet class. Its members are represented very compactly as bits rather than hashed elements.

A similar (albeit slightly less powerful⁴) structure has been implemented in the enum-set crate. Given a #[repr(u32)] enum type:

```rust
#[repr(u32)]
#[derive(Clone, Copy, Debug, Eq, Hash, PartialEq)]
enum Weekday {
    Monday, Tuesday, Wednesday, Thursday, Friday, Saturday, Sunday,
}
```

you can create an EnumSet of its variants:

```rust
let mut weekend: EnumSet<Weekday> = EnumSet::new();
weekend.insert(Weekday::Saturday);
weekend.insert(Weekday::Sunday);
```

as long as you provide a simple trait impl that specifies how to convert those enum values to and from u32:

```rust
impl enum_set::CLike for Weekday {
    fn to_u32(&self) -> u32 { *self as u32 }
    // Only sound for values previously produced by to_u32 (0 through 6 here).
    unsafe fn from_u32(v: u32) -> Self { std::mem::transmute(v) }
}
```

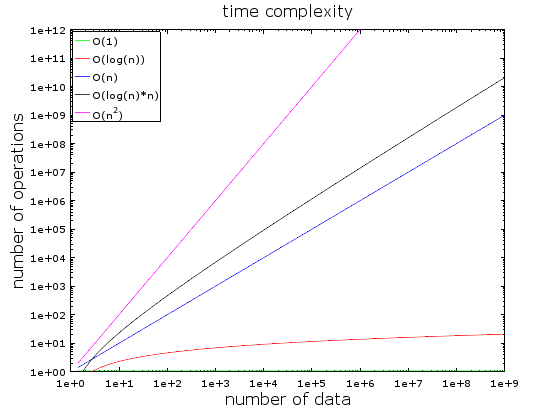

The advantage is having a set structure represented by a single, unsigned 32-bit integer, leading to O(1) complexity of all common set operations. This includes membership checks, the union of two sets, their intersection, difference, and so on.
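The bit-per-variant idea can be sketched without the crate. WeekdaySet below is a hypothetical, hand-rolled type, not enum-set's API: it stores variant number n as bit n of a u32, so membership, union, intersection, and difference each reduce to constant-time bitwise operations.

```rust
#[repr(u32)]
#[derive(Clone, Copy, Debug, Eq, Hash, PartialEq)]
pub enum Weekday {
    Monday, Tuesday, Wednesday, Thursday, Friday, Saturday, Sunday,
}

/// Hypothetical set type; the default value (all bits zero) is the empty set.
#[derive(Clone, Copy, Debug, Default, Eq, PartialEq)]
pub struct WeekdaySet(u32);

impl WeekdaySet {
    fn bit(day: Weekday) -> u32 {
        // Discriminants are 0..=6, so each variant gets its own bit.
        1 << (day as u32)
    }
    pub fn insert(&mut self, day: Weekday) {
        self.0 |= Self::bit(day);
    }
    pub fn contains(&self, day: Weekday) -> bool {
        (self.0 & Self::bit(day)) != 0
    }
    pub fn union(self, other: Self) -> Self {
        WeekdaySet(self.0 | other.0)
    }
    pub fn intersection(self, other: Self) -> Self {
        WeekdaySet(self.0 & other.0)
    }
    pub fn difference(self, other: Self) -> Self {
        WeekdaySet(self.0 & !other.0)
    }
    pub fn len(&self) -> u32 {
        // The number of members equals the number of set bits.
        self.0.count_ones()
    }
}
```

This also shows where the 32-variant limit comes from: a u32 has only 32 bits to hand out.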

antidote

As part of fulfilling the promise of Fearless Concurrency™, Rust offers multiple synchronization primitives, all defined in the std::sync module. One thing that Mutex, RwLock, and similar mechanisms there have in common is that their locks can become “poisoned” if a thread panics while holding them. As a result, acquiring a lock requires handling the potential PoisonError.

For many programs, however, lock poisoning is not even a remote possibility, but a straight-up impossible situation. If you follow the best practices of concurrent resource sharing, you won’t be holding locks for more than a few instructions, devoid of unwraps or any other opportunity to panic!(). Unfortunately, you cannot prove this to the Rust compiler statically, so it will still require you to handle a PoisonError that cannot happen.

This is where the aptly named antidote crate offers help. In it, you can find the same locks and guards API that std::sync offers, just without the PoisonError. In many cases, this removal radically simplifies the interface, for example by turning Result<Guard, Error> return types into just Guard.

The caveat, of course, is that you need to ensure all threads holding these “immunized” locks either:

- don’t panic at all; or

- don’t leave guarded resources in an inconsistent state if they do panic

As mentioned earlier, the best way to make that happen is to keep lock-guarded critical sections minimal and infallible.
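The core of such a poison-free lock can be sketched as a thin wrapper over std::sync::Mutex that recovers the guard from a PoisonError via PoisonError::into_inner. PoisonFreeMutex is a hypothetical name and this is not antidote's actual implementation; it inherits exactly the caveat above, since after a panic the guarded data is handed out as-is.

```rust
use std::sync::{Mutex, MutexGuard, PoisonError};

// Hypothetical poison-free wrapper: lock() returns the guard directly
// instead of a LockResult.
pub struct PoisonFreeMutex<T>(Mutex<T>);

impl<T> PoisonFreeMutex<T> {
    pub fn new(value: T) -> Self {
        PoisonFreeMutex(Mutex::new(value))
    }

    pub fn lock(&self) -> MutexGuard<'_, T> {
        // If another thread panicked while holding the lock, take the guard
        // out of the PoisonError anyway. This is only sound if critical
        // sections never leave the data in an inconsistent state.
        self.0.lock().unwrap_or_else(PoisonError::into_inner)
    }
}
```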

matches

Pattern matching is one of the most important features of Rust, but some of the relevant language constructs have awkward shortcomings. The if let conditional, for example, cannot be combined with boolean tests:

```rust
// Rejected by rustc at the time of writing: `if let` can't be chained with `&&`.
if let Foo(_) = x && y.is_good() { /* ... */ }
```

This limitation requires additional nesting, or a different approach altogether.

Thankfully, to help with situations like this, there is the matches crate with a bunch of convenient macros. Besides its namesake, matches!:

```rust
if matches!(x, Foo(_)) && y.is_good() { /* ... */ }
```

it also exposes assertion macros (assert_matches! and debug_assert_matches!) that can be used in both production and test code.
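A matches! macro has also been part of the standard library since Rust 1.42, so the pattern can be tried without any dependency. Shape, area, is_big_circle, and has_large_radius are hypothetical; the second function shows that the std macro also accepts an if guard inside the pattern.

```rust
// Uses std's matches!; the types and functions are illustrative only.
#[derive(Debug)]
pub enum Shape {
    Circle { radius: f64 },
    Square { side: f64 },
}

fn area(shape: &Shape) -> f64 {
    match shape {
        Shape::Circle { radius } => std::f64::consts::PI * radius * radius,
        Shape::Square { side } => side * side,
    }
}

/// A pattern test and a boolean test combined with a plain `&&`.
pub fn is_big_circle(shape: &Shape, threshold: f64) -> bool {
    matches!(shape, Shape::Circle { .. }) && area(shape) > threshold
}

/// The same idea, expressed with a guard inside the macro.
pub fn has_large_radius(shape: &Shape, min_radius: f64) -> bool {
    matches!(shape, Shape::Circle { radius } if *radius > min_radius)
}
```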

This concludes the overview of small Rust crates, at least for now.

To be sure, these crates are far from the only ones that are small in size and simultaneously almost indispensable. Many more great libraries can be found in, e.g., the Awesome Rust list, though you could obviously argue whether all of them are truly “micro” ;-)

If you know more crates in a similar vein, make sure to mention them in the comments!

¹ A sum type consists of several alternatives, out of which only one has been picked for a particular instance. The other common name for it is a tagged union.

² Unless you come from Haskell, that is, where Either is the equivalent of Rust’s Result :)

³ You will occasionally need an explicit * to trigger the Deref coercion it uses.

⁴ It only supports unit-only (C-like) enums of up to 32 variants.